Why smart buildings need a new approach to data collection

For years, the standard approach to smart buildings has been to gather data into automation systems, push it to the cloud via APIs, and rely on layers of sensors and IoT devices to fill in the gaps. This method became best practice not because it was perfect, but because it was the only viable way to extract even some meaningful data from buildings, and because it made sense as a natural extension of traditional process automation.

While this approach might check some of the boxes for being data-driven, I don’t believe it’s truly intelligent. Today, we have better ways to achieve true intelligence without the complexity, cost, and limitations of the old model.

Three reasons why this isn’t the smartest infrastructure for getting data

1. Price

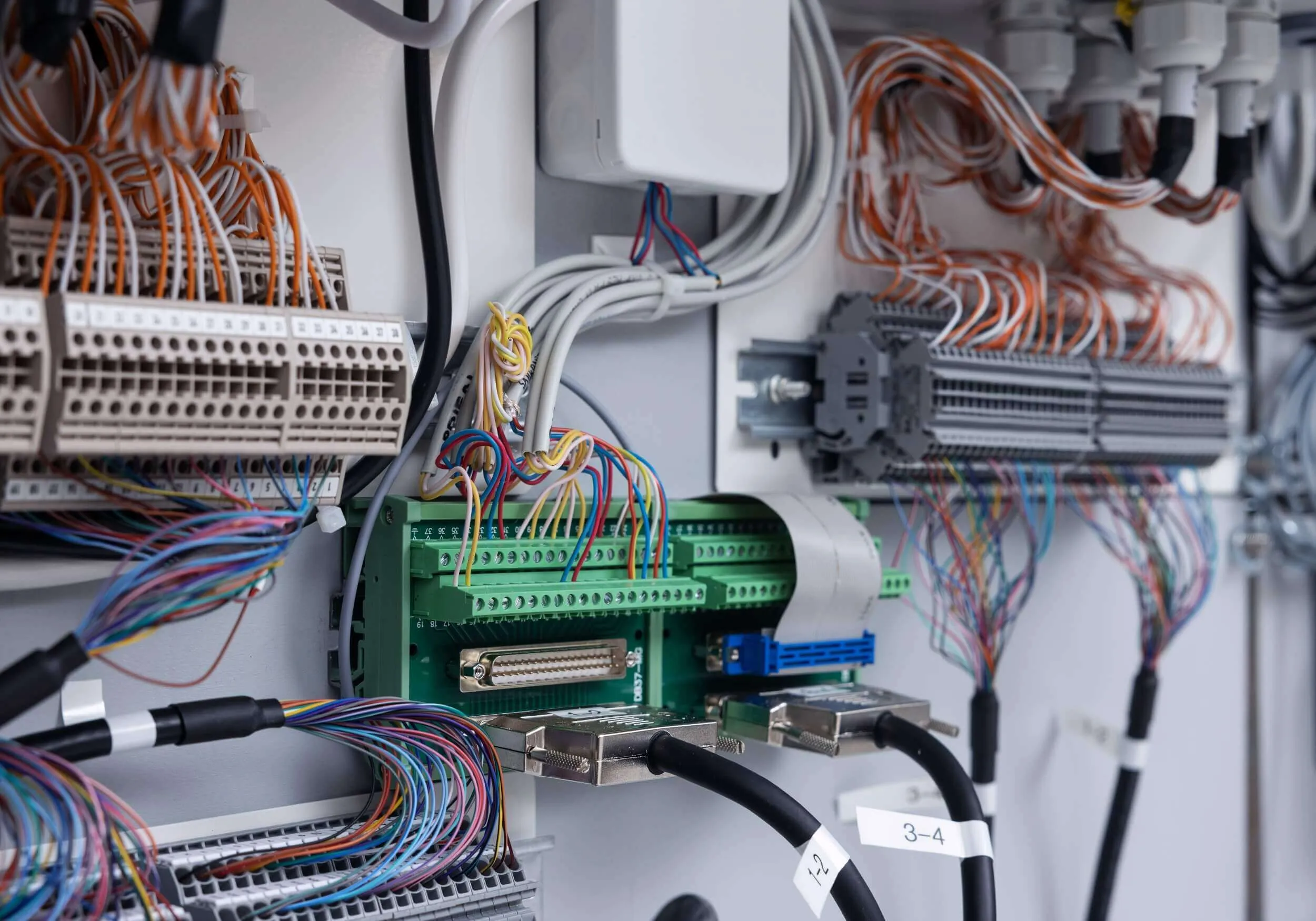

Inside the building: Custom integrations, such as specialized cabling, automation coding, and proprietary protocols, quickly inflate expenses. You’re not just paying for the hardware; you’re paying for the labor to stitch it all together. Automation systems often have limits on the number of data points they can handle, forcing you to deploy multiple automation centers just to cover all your needs. That means more physical space, more maintenance, and more capital expenditure.
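To make the data point limit concrete, here is a minimal arithmetic sketch. The function name and the figures (2,600 points, 500 points per automation center) are hypothetical and chosen only for illustration; real limits vary by vendor and product.

```python
import math


def controllers_needed(total_points: int, points_per_controller: int) -> int:
    """Return how many automation centers are needed to cover all data points."""
    if points_per_controller <= 0:
        raise ValueError("points_per_controller must be positive")
    # A partially filled automation center still has to be bought and installed,
    # so the result is rounded up.
    return math.ceil(total_points / points_per_controller)


# Hypothetical building: 2,600 data points, 500 points per automation center.
print(controllers_needed(2600, 500))  # 6
```

In this made-up case, the last 100 points alone call for a sixth automation center, which is how a per-device limit turns into extra hardware, space, and maintenance.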

In the cloud: The costs don’t stop at the building’s edge. Cloud-level API integrations require additional custom work, and because these systems are typically vendor-locked, you’re stuck with their pricing. Need to add a new sensor or device? You can’t just pick the most affordable or best-performing option; you’re limited to what’s compatible with your existing setup. Over time, this lack of flexibility drives up costs, as you’re constantly paying premiums for proprietary solutions or workarounds.

This fragmented approach doesn’t just hit your wallet today; it creates ongoing financial drag. Every new integration, every upgrade, and every expansion becomes a negotiation with vendors, a custom project, or a compromise on performance. The result? A system that’s expensive to build, costly to maintain, and hard to adapt as your needs evolve.

2. Flexibility

Once you’ve painstakingly set up numerous local and cloud API integrations, what happens when things inevitably change? What if a field device needs replacement? What about tenant improvements, new systems, or the installation of new sensors? Change is a constant in building technology. With this rigid setup, you’re condemned to a continuous race of updating local connections, local automation coding, and then updating the cloud integrations.

Many of us (especially those with a few more years under our belts) remember this integration nightmare from the nineties. It’s expensive and time-consuming, and because buildings involve physical devices, something will almost always be overlooked or left undone under this inflexible approach to integration and data collection.

3. Data quality

Even the most meticulously planned automation systems leave critical gaps in your data, and those gaps only widen over time, because historical data is stored only for the points that were programmed to be logged. At the start of any project, it’s impossible to anticipate every future need. Will you need granular energy consumption data by zone next year? Real-time occupancy patterns for space optimization? Predictive maintenance insights for HVAC? Because custom integrations and development work are so costly and time-consuming, buildings often end up with static data sets that reflect yesterday’s priorities, not tomorrow’s opportunities. By the time you realize what’s missing, the system is already locked in, and updating it means more expense, more downtime, and more compromise.
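The gap described above can be illustrated with a small sketch. It assumes a simplified trend log that either keeps only a preconfigured set of points or keeps everything it sees; the `TrendLog` class and the point names are hypothetical and not taken from any particular automation product.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TrendLog:
    """Stores readings per point; if configured_points is None, every point is kept."""

    configured_points: Optional[set[str]] = None
    history: dict[str, list[tuple[int, float]]] = field(default_factory=dict)

    def record(self, point: str, timestamp: int, value: float) -> bool:
        """Store one reading and report whether it was kept."""
        if self.configured_points is not None and point not in self.configured_points:
            return False  # dropped: this history cannot be recovered later
        self.history.setdefault(point, []).append((timestamp, value))
        return True


# Hypothetical readings: (point name, timestamp in seconds, value)
readings = [
    ("ahu1.supply_temp", 0, 18.2),
    ("zone3.co2", 0, 640.0),
    ("ahu1.supply_temp", 60, 18.4),
    ("zone3.co2", 60, 655.0),
]

preconfigured = TrendLog(configured_points={"ahu1.supply_temp"})
capture_all = TrendLog()
for point, ts, value in readings:
    preconfigured.record(point, ts, value)
    capture_all.record(point, ts, value)

print(sorted(preconfigured.history))  # only the point that was programmed in
print(sorted(capture_all.history))    # every point that was observed
```

If someone later decides that CO2 history matters, the preconfigured log has nothing to offer for that point, while the capture-all log already holds it.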

But the bigger issue isn’t just missing data. It’s that the data you do collect often isn’t fit for purpose. Raw operational data from traditional automation systems is designed for basic control, not for advanced applications. It lacks the depth, context, and real-time responsiveness that modern analytics and AI demand. Try feeding that into a machine learning model or a predictive analytics tool, and you’ll quickly hit limits. Garbage in, garbage out isn’t just a cliché; it’s the reality when your data infrastructure wasn’t built for intelligence from the ground up.

Keep looking forward, not backward

Real estate has largely been left behind in digitalization, partly due to industry dynamics but primarily because it lacks a robust data infrastructure. If we want to bring real estate into the 2020s, we need the same kind of data infrastructure that other industries already have. This cannot be built on process automation tools; it requires modern data processing capabilities borrowed directly from the IT sector. We have the opportunity to take a giant leap from the 1970s to 2025. There’s no need to settle for a small step to where other industries were in 1990.